Can technology truly strip away reality, leaving behind only a digital echo of what was? The rise of "undress AI" applications raises exactly that question. These tools are altering the landscape of image manipulation, and their implications demand critical examination.

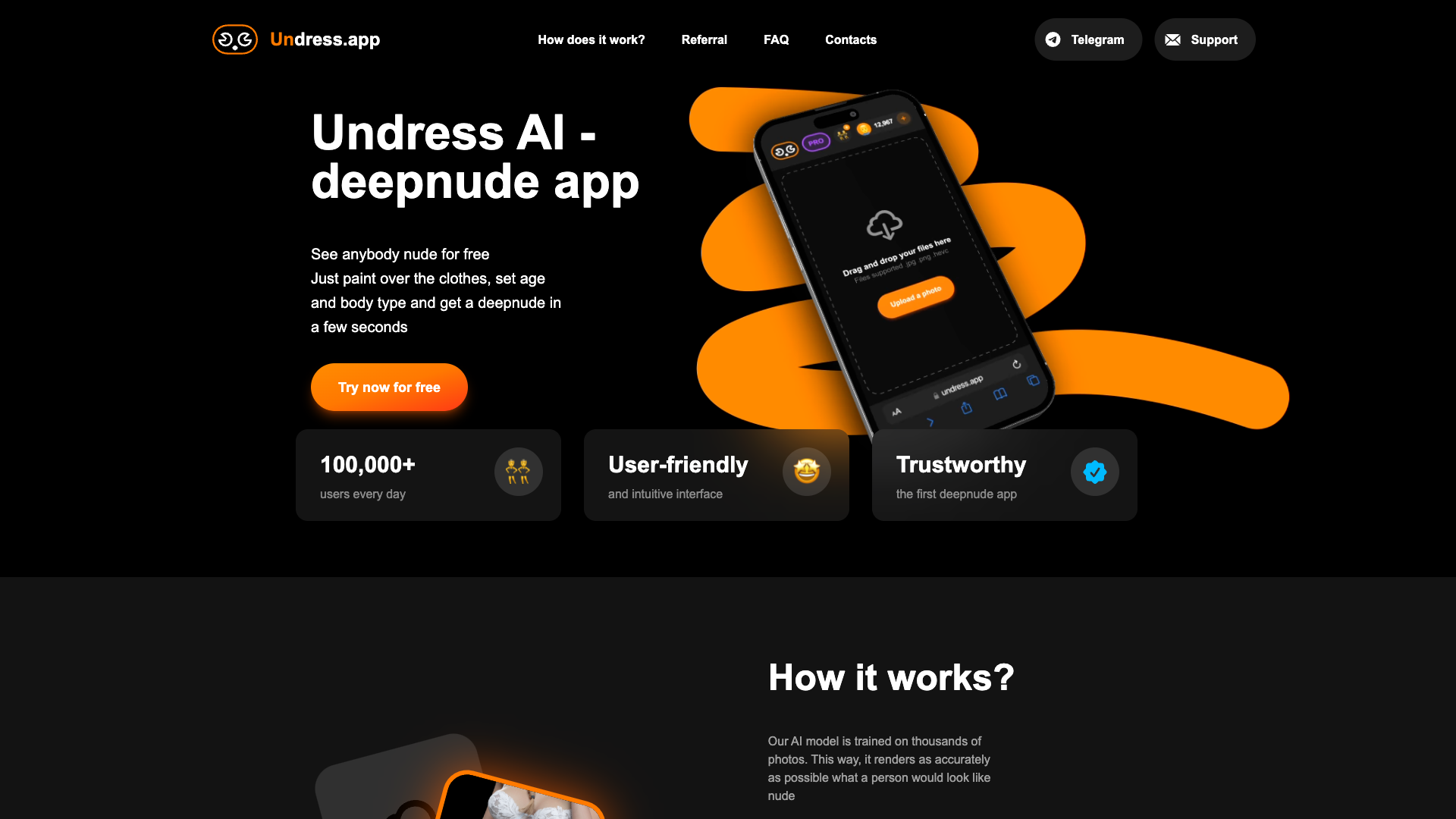

The digital realm is constantly evolving, and with it, the tools available to shape and reshape our visual experiences. Applications like Undressher, Nudify, and others are at the forefront of this evolution, offering users the ability to seemingly undress individuals in photographs with alarming ease. These platforms, powered by advanced AI, machine learning, and sophisticated image recognition algorithms, promise a streamlined process: upload an image, and the technology does the rest. The allure of instant transformation, the promise of realistic results, and the accessibility of free or low-cost options have fueled their popularity, but at what cost? The very ease of use, the "simplicity and ease" designed into these interfaces, belies the complex ethical concerns they raise.

| Feature | Details |
|---|---|
| Name of Technology | Undress AI (generic term for clothing removal applications) |
| Primary Function | Digitally remove clothing from images using AI and machine learning. |
| Underlying Technology | Advanced image recognition, machine learning algorithms, and neural networks. |
| Key Features | Automated clothing removal, potential for realistic results, varying levels of user customization. |
| User Interface | Typically involves image upload, often with options for adjustments like style and effects. |
| Ethical Concerns | Potential for non-consensual creation of explicit content, misuse for harassment, defamation, and privacy violations. |
| Data Security | Varies; encryption used by some to protect user data, though data storage practices may differ. |
| Pricing Model | Often offers free and premium (paid) versions; premium versions may unlock advanced features, tokens, or higher processing capacity. |
| Examples of Applications | Undressher, Nudify, and similar platforms. |
| Industry Impact | Revolutionizing digital image editing, creating new opportunities while also raising significant ethical issues. |
| Reference | Wikipedia: Deepfake (provides context on AI image manipulation). |

The creators of these applications often highlight the "simplicity" of their interfaces, emphasizing the ease with which users can upload a photo and achieve the desired result. The Undressher app, for instance, is specifically "designed for simplicity and ease of use, ensuring seamless navigation for everyone, regardless of technical prowess." This focus on user-friendliness is a common marketing strategy, making these tools accessible to a wide audience. The promise is clear: "Just upload a photo and get it undressed!" The Undress AI technology analyzes and modifies the image, aiming to create a "seamless transformation" that yields a convincingly altered visual outcome. The process generally involves choosing an image, letting the AI do its work, and then saving the "transformed photo." Undressher, for its part, assures users that it employs encryption technology to safeguard user data, and claims that the images are not stored on their servers after processing.

The functionality, at its core, relies on sophisticated algorithms and machine learning. These "advanced AI algorithms" are trained on vast datasets of images, enabling them to "analyze and modify the image to virtually undress the person." The aim is to produce results that are not only quick but also "realistic" and "remarkably accurate." This level of technological sophistication, however, comes with a shadow. The very power of these tools raises complex questions about consent, privacy, and the potential for misuse. The "sophisticated algorithms ensure every detail is preserved," but this precision, when applied to manipulating a person's image without their consent, morphs into a tool with the capacity for significant harm.

Consider the inherent vulnerabilities. Any image of a person that is publicly available online could be subjected to this type of manipulation. Because no one can control how altered images spread, individuals can find themselves the unwilling subjects of digitally created explicit content. The implications extend beyond personal embarrassment; they can encompass harassment, reputational damage, and psychological distress. Moreover, the availability of these technologies makes it easier to create deepfakes, in which a person is depicted in situations they never experienced, which can fuel misinformation and other malicious uses.

The commercial aspect of this technology is also worth examining. The Undressher app, for example, has a "premium plan" that starts at a monthly fee for a limited number of "tokens," presumably for processing images. This monetization strategy is consistent with how many tech platforms operate: providing a basic service for free to attract users, then charging for advanced features or increased usage. However, the commodification of this technology, especially given its potential for harm, introduces additional ethical considerations. Is there a point where the profit motive outweighs the potential risks? What responsibilities do the developers of these applications have to ensure their tools are not used for malicious purposes?

The claims made by the developers of these AI-powered tools must be scrutinized. Phrases like "start generating realistic results quickly and safely" and "the best free AI tool for realistic results" often oversimplify the complexities and risks involved. While the technology itself might be impressive, the "simplicity" it offers can be deceptive. The statement "it's a great way to undress any person online" is particularly troubling, as it normalizes the act of digitally stripping someone's clothes without their knowledge or consent. The use of terms such as "nudify online" and "get nudes in seconds with undress AI technology" further highlights the potential for this technology to be used for creating explicit content without the subject's consent.

The allure of these applications lies partly in the novelty and the excitement surrounding AI technology. The phrase, "AI clothing remover tool is revolutionizing digital image editing, powered by advanced AI technology," encapsulates this fascination. The "leading innovation" often comes with a price, with the potential for misuse far outweighing the legitimate uses. In this landscape, where advanced algorithms meet intuitive interfaces, the ethical ramifications demand rigorous examination. The "stunning realism and remarkable accuracy" that these apps strive to achieve are not neutral. They are, instead, tools with the potential to inflict significant harm on individuals and society, making the conversation around their ethical application and regulation more crucial than ever.

While some may argue that these tools can be used for artistic purposes or in specific research contexts, the overwhelming risk of misuse cannot be ignored. The ease with which images can be altered, combined with the potential for widespread dissemination, creates a dangerous environment. This requires a multifaceted approach involving both technological safeguards and stricter legal frameworks. Developers should prioritize ethical considerations in the design and deployment of these applications, incorporating measures to prevent non-consensual use and providing robust reporting mechanisms for victims. Legal systems must adapt to address the unique challenges posed by this technology, including enacting strong privacy laws and establishing clear guidelines regarding the creation and distribution of manipulated images.

Finally, widespread public education is essential. People need to understand what AI image manipulation can do and the risks it carries. This includes promoting media literacy, teaching individuals how to identify deepfakes, and fostering a culture of consent and respect online. Without this collective understanding, the potential for harm from these technologies will only continue to grow. Undress AI applications make one point clear: the future of digital image manipulation and, by extension, online privacy and consent, hinges on our collective ability to navigate this complex and evolving landscape responsibly.